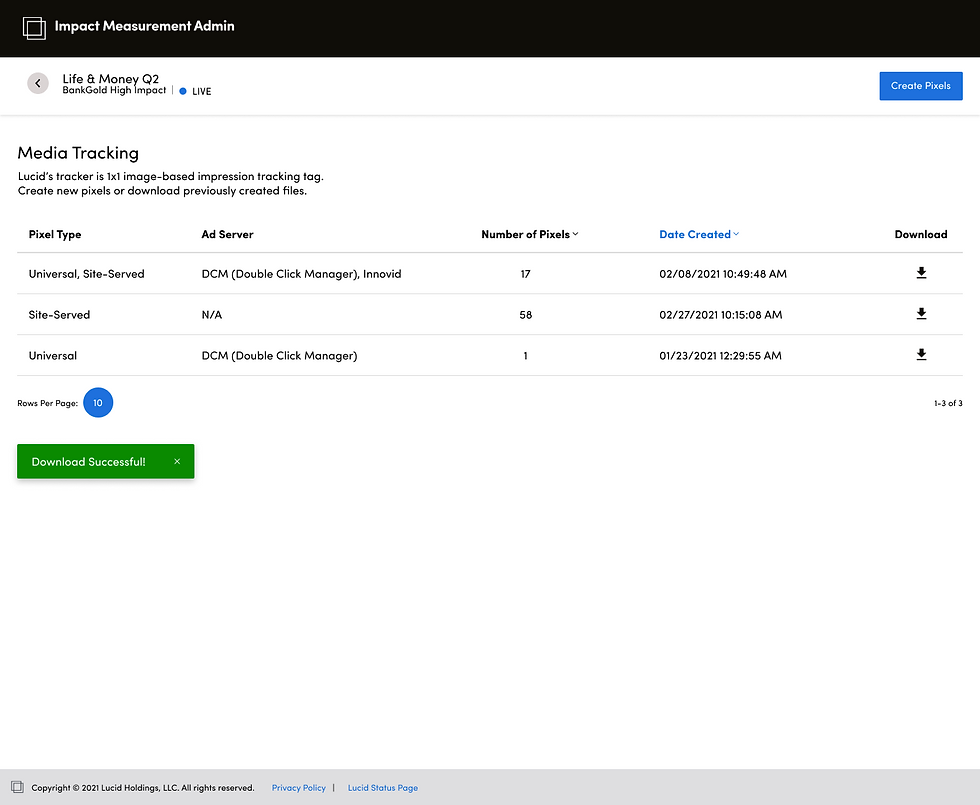

Tracking Tag Builder

Impact Measurement enables the measurement of digital campaign success and real-time optimization of media performance. It does this using tags: snippets of code placed on web pages to gather information about website visitors.

The Evolution of Impact Measurement

In 2019, I joined Lucid's initiative to develop a new product, Impact Measurement. Working closely with a Product Manager and an Engineer, we took it from initial concept to reality in nine months, achieved market fit, and led a successful launch. After launch, our focus shifted to turning the product from a managed service into an automated SaaS solution. This case study follows one of those post-launch projects.

The Challenge

This project set out to fix the labor-intensive, error-prone process of creating media tracking tags. Product operations team members had to create impression tracking tags manually, outside the product interface, which was inefficient and invited mistakes.

Goals

- Create a dedicated space within the Impact Measurement admin interface for building tracking pixels.
- Reduce human errors in tag creation.
- Increase speed and scalability for operations in creating tags.

Role & Collaboration

As Senior Product Designer, I led the design work for this project, collaborating with a multidisciplinary team including an Associate Product Designer, Product Manager, Project Manager, and Front-End and Back-End Engineers.

Process

We started this project with a "measure-build-learn" approach, aiming to reach user feedback and tangible results as quickly as possible. Working closely with developers, we built an interactive prototype of the Product Team's envisioned minimum viable product (MVP). This let us run tests and gather the data needed to validate our initial hypothesis.

Kickoff

The kickoff meeting brought together key stakeholders, including the Product Team, the Engineering Team, the Project Manager, and the Product Design Team, to define the project's scope. During the session, we outlined our proposed design process, based on the measure-build-learn methodology, to determine what the minimum viable product (MVP) should include.

Digging through Existing Tools & Workflow

The Excel template the product operations team used to generate tracking tags was inefficient in several ways:

Cumbersome

The template was so large that it required extensive horizontal and vertical scrolling.

Unreliable

Manual inputs were prone to human error, compromising the accuracy and integrity of the tracking tags.

Time-consuming

Creating tracking tags manually in the Excel template was laborious and slow, and it forced team members to switch between several platforms.

Initial Concept Scope

We worked with the product team to develop an initial concept that met the requirements for the minimum viable product (MVP). Using components from the "Lucidium" design system, we built a mockup prototype intended to shorten turnaround time and limit technical debt down the line.

Initial Impression Evaluation

We used two scene-setting questions to gauge users' first impressions of the new feature and its location within the interface.

Usability Assessment through Narrative Scenarios

We used two narrative scenarios, combining exploratory and specific tasks, to surface design inconsistencies and usability issues in the interface and its content.

Impact Analysis on Internal Customer Satisfaction

After each scenario, we asked each participant five evaluative follow-up questions to identify the product attributes with the greatest impact on internal customer satisfaction.

By structuring our testing plan this way, we aimed to gain comprehensive insight into the user experience and to refine the product iteratively based on our findings.

User Flows & Pain Points

Our analysis centered on three key user flows: Universal Tag, Single Site-Served Tag, and Bulk Create Site-Served Tags. We rated the severity of user pain points within each flow, using feedback from the moderated testing sessions to pinpoint the areas that most needed improvement. The bulk-create flow had nearly twice as many severe pain points as the other flows, which made it our primary focus.

How Might We?

With the Bulk Create Site-Served Tags flow identified as the focus area, we began ideation using the "How Might We" method, which proved instrumental in uncovering opportunities for improvement.

Examples:

- How might we automate this tedious process?
- How might we enhance user confidence in correctly aligning bulk-added values?
- How might we alleviate the stress associated with reviewing large bulk-add files?
- How might we optimize the user flow for multiple bulk upload processes?
- How might we implement safeguards to prevent the creation of broken tags by users?
- How might we ensure users' success while maintaining consistency in design and functionality across previous pages?

Universal Tags

Universal tags are now generated automatically in just two clicks. Product operations team members can quickly check the generated tag by selecting a macro value and viewing the highlighted preview, which makes tag creation faster and more accurate.

Site-served & Bulk Upload

With the implementation of CSV upload functionality, the system now automatically parses uploaded files and identifies the required macros to create tags. This feature simplifies the process for operations team members, allowing them to validate the tags being produced by cross-referencing values and pixel counts, ensuring accuracy and completeness.
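The parse-and-build step can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Impact Measurement's actual code: it assumes a hypothetical tag template whose macros are written as `[MACRO_NAME]`, and a CSV whose header names match those macros. The template URL, the macro names, and the `required_macros` and `build_tags` helpers are all hypothetical. Each data row yields one tag, so the number of tags can be cross-checked against the number of rows, mirroring the pixel-count validation described above.

```python
import csv
import io
import re
from urllib.parse import quote

# Hypothetical site-served tag template. The [MACRO] syntax, the domain, and
# the macro names are illustrative assumptions, not the product's real format.
TEMPLATE = (
    "https://pixel.example.com/imp"
    "?site=[SITE_ID]&placement=[PLACEMENT_ID]&creative=[CREATIVE_ID]"
)

MACRO_PATTERN = re.compile(r"\[([A-Z_]+)\]")


def required_macros(template: str) -> list[str]:
    """Return the macro names a template needs, in order of first appearance."""
    seen: list[str] = []
    for name in MACRO_PATTERN.findall(template):
        if name not in seen:
            seen.append(name)
    return seen


def build_tags(csv_text: str, template: str = TEMPLATE) -> list[str]:
    """Build one tag per CSV row by substituting each macro with the row's value."""
    macros = required_macros(template)
    reader = csv.DictReader(io.StringIO(csv_text))
    headers = reader.fieldnames or []
    missing = [m for m in macros if m not in headers]
    if missing:
        raise ValueError(f"CSV is missing columns for macros: {missing}")
    tags = []
    for row in reader:
        tag = template
        for macro in macros:
            # Short rows yield None for absent fields; treat them as empty strings.
            value = (row.get(macro) or "").strip()
            # URL-encode so characters like '&' or spaces cannot break the query string.
            tag = tag.replace(f"[{macro}]", quote(value, safe=""))
        tags.append(tag)
    return tags


if __name__ == "__main__":
    sample = "SITE_ID,PLACEMENT_ID,CREATIVE_ID\n101,2001,A\n102,2002,B\n"
    tags = build_tags(sample)
    # The tag count should equal the number of data rows (the pixel-count check).
    print(len(tags))
    print(tags[0])
```

Raising an error when a required column is absent, rather than emitting tags with empty macros, is one way to act on the "prevent the creation of broken tags" goal; an interface would more likely surface the same condition as an alert.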

A new alert handling system has been introduced to provide users with immediate feedback. When a user successfully uploads a CSV file, the system now checks for unmapped columns, providing alerts for any discrepancies. This proactive approach ensures that potential issues are addressed promptly, minimizing errors and improving overall data integrity.
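The unmapped-column check can be sketched in the same spirit. This sketch assumes, hypothetically, that a column counts as "mapped" when its header exactly matches a macro in the tag template, again using an assumed `[MACRO_NAME]` syntax; the `find_unmapped_columns` and `alerts_for` names and the alert wording are illustrative only. It reports discrepancies in both directions: columns in the file that no macro uses, and macros that no column supplies.

```python
import csv
import io
import re

# Assumed macro syntax: [MACRO_NAME] inside the tag template (hypothetical).
MACRO_PATTERN = re.compile(r"\[([A-Z_]+)\]")


def find_unmapped_columns(csv_text: str, template: str) -> dict[str, list[str]]:
    """Compare the CSV header row with the macros the template requires."""
    headers = [h.strip() for h in next(csv.reader(io.StringIO(csv_text)), [])]
    macros = set(MACRO_PATTERN.findall(template))
    return {
        # Columns in the file that no macro consumes (possible typos or extra data).
        "unmapped_columns": [h for h in headers if h not in macros],
        # Macros the template needs that the file does not supply.
        "missing_macros": sorted(macros - set(headers)),
    }


def alerts_for(result: dict[str, list[str]]) -> list[str]:
    """Turn the comparison into user-facing alert messages."""
    alerts = [f"Column '{c}' is not mapped to any macro." for c in result["unmapped_columns"]]
    alerts += [f"No column found for required macro '{m}'." for m in result["missing_macros"]]
    return alerts


if __name__ == "__main__":
    template = "https://pixel.example.com/imp?site=[SITE_ID]&placement=[PLACEMENT_ID]"
    # A misspelled header ("PLACEMNT_ID") and an extra column ("NOTES").
    uploaded = "SITE_ID,PLACEMNT_ID,NOTES\n101,2001,x\n"
    for message in alerts_for(find_unmapped_columns(uploaded, template)):
        print(message)
```

Checking both directions means a single misspelled header is reported twice, once as an unmapped column and once as a missing macro, which gives the user enough context to spot the typo before any tags are generated.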

Research Objectives and Testing Plan

To achieve our research objectives effectively, we formulated a comprehensive testing plan structured around three key components:

Enhancing Universal Tag Creation

A key improvement to universal tag creation was letting users generate multiple tags in one pass. We introduced a dedicated "card form" for tag creation, which allowed users to create several tags in a single session and made the process faster.

Revamping Site-Served Bulk Upload Flow

The bulk upload flow for site-served tags was overhauled, replacing a cumbersome manual copy-paste procedure with automated CSV parsing. The redesign removed a major source of user error and drastically reduced the time needed to generate hundreds of tracking tags in bulk, making users' tag management both faster and more accurate.

Afterwards

Going forward, we will focus on further improving the CSV upload process, aiming for a seamless experience with minimal manual intervention and effortless data management for our users.

Through continued iterative design and refinement of the tracking tag builder, Impact Measurement aims to improve operational efficiency and reduce errors, giving the product operations team a smoother, more effective platform for delivering services to our users.

Moderated Testing

We ran remote usability tests with five members of the product operations team to assess the viability of the initial concept.

Testing Analysis

A spreadsheet helped us identify task success rates and behavioral patterns, while affinity mapping uncovered recurring themes.

Key Insights:

Cognitive Load

Users experienced a high cognitive load: they were often confused and relied heavily on contextual cues to complete tasks.

Flow Challenges

Users found the design hard to navigate, which led to general confusion and left some testing tasks incomplete.

Output Quality Assurance

Users frequently checked the tracking tag in the preview box, but they tended to take extra steps to confirm that their input and output were valid.